The Whole Earth Codec is a foundation model that transforms planetary-scale, multi-modal ecological data into a single knowledge architecture

Traditional models of the observatory have focused on gazing outward, towards the cosmos. The recent proliferation of planetary sensor networks has inverted this gaze, forming a new kind of planetary observatory that takes the earth itself as its object. Could we cast the entire earth as a distributed observatory, using a foundation model to compose a singular, synthetic representation of the planet? The current generation of models primarily deals with human language, its training corpus scraped from the detritus of the internet. We must widen the aperture of what these models observe to include the non-human.

The Whole Earth Codec is an autoregressive, multi-modal foundation model that allows the planet to observe itself. This proposal radically expands the scope of foundation models, moving beyond anthropocentric language data towards the wealth of ecological information immanent to the planet. Moving from raw sense data to high-dimensional embedding in latent space, the observatory folds in on itself, thus revealing a form of computational reason that transcends sense perception alone: a sight beyond sight. Guided by planetary-scale sensing rather than myopic anthropocentrism, the Whole Earth Codec opens up a future of ambivalent possibility through cross-modal meta-observation, perhaps generating a form of planetary sapience.

Inverting the Observatory

On April 10, 2019, the Event Horizon Telescope released the first-ever “image” of a black hole. To produce it, a global network of telescopes together formed a camera whose aperture spanned the width of the planet. In contrast to earlier observatories, this planetary-scale observatory enables a form of sight that transcends the bounded locality of the site: a sight beyond site.

This planetary vision is only possible via the synthetic operations of computation. Computation subjects raw sense data from the distributed observatory to inductive reasoning, producing a form of sight that transcends the immediate act of seeing: a sight beyond sight.

Planetary-scale computation decouples observation from the observer. Like the Event Horizon Telescope, the recent proliferation of terrestrial sensor networks renders the entire Earth a giant observatory.

Rather than looking outwards towards the cosmos, these sensors invert the gaze, taking the Earth as their object of observation.

Sensors have been deployed piecemeal across the planet, but less attention has been paid to aggregating and analyzing their data at planetary scale. Realizing the full potential of this distributed observatory requires both sensory mechanisms for gathering data and computational mechanisms for processing it.

Foundation models may enable this synthesis. Trained on vast amounts of data via unsupervised learning, foundation models accumulate a body of general knowledge that can then be fine-tuned for downstream tasks.

Despite their massive scale, existing foundation models are trained on a corpus that reflects a mere fraction of possible data, scraped from the detritus of the internet. Language, and indeed human culture as a whole, is only a subset of the wealth of information immanent to the biosphere. The aperture of what these models observe should widen to include the ecological and the nonhuman.

There is nothing, however, that limits potential foundation models to text alone. The planet produces stimuli in the form of energy, particles, waves, and fields. Transduced by machine sensors, these signals provide a potential multi-modal input for models.

Current foundation models demonstrate emergent capabilities derived from hidden associations within the vastness of their training data. Integrating multi-modal data from the biosphere into a single knowledge architecture might enable an emergent planetary intelligence, bypassing anthropocentric biases to discover new resonances across modalities.

Enter the Whole Earth Codec, a foundation model that integrates myriad streams of ecological data from the Earth and allows the planet to observe itself.

Folding the Gaze

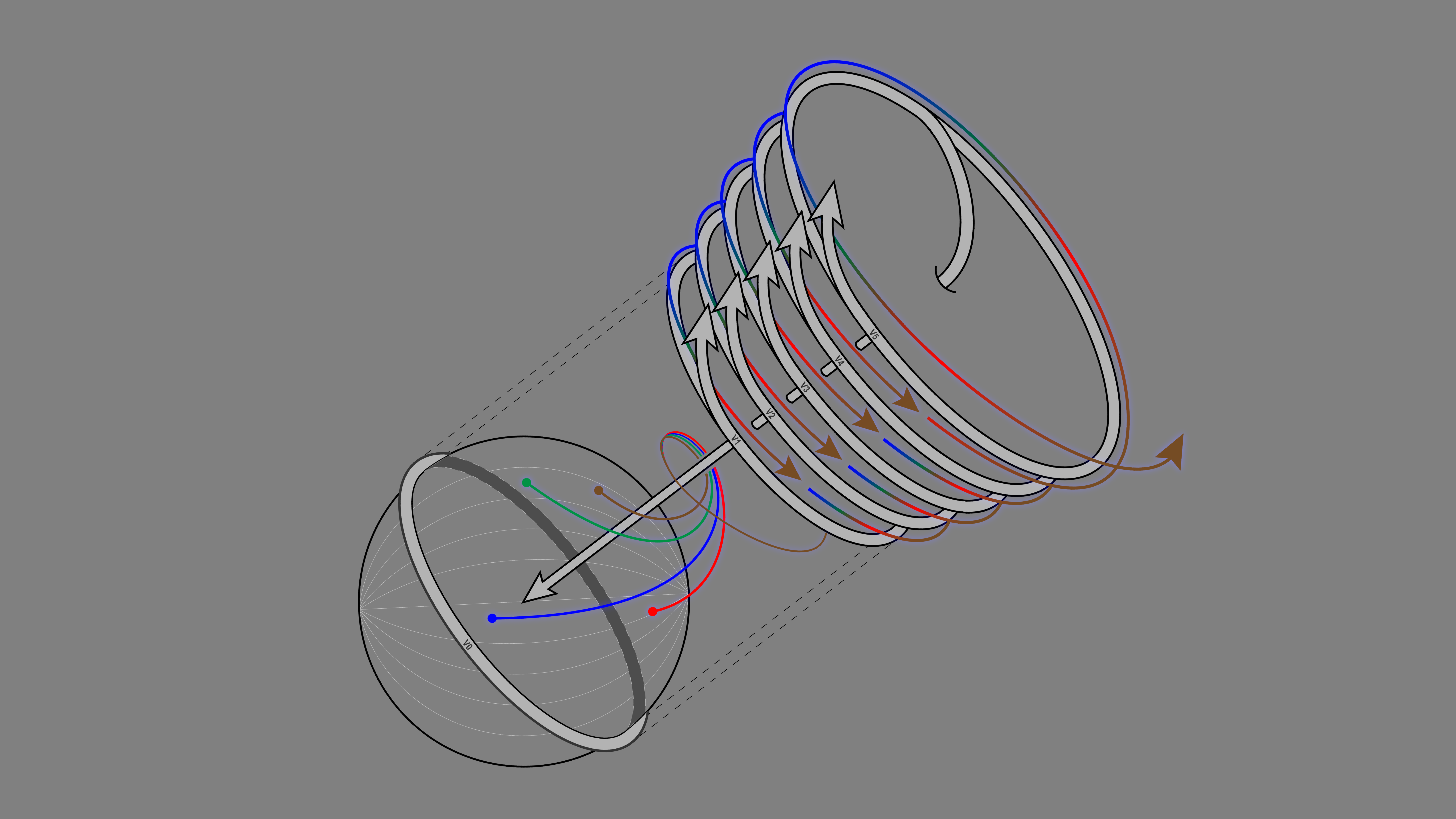

Central to transformer architecture, which underpins the large language models of today, is the self-attention mechanism. The model learns the importance of each token in a given sequence relative to others, computing the “attention” it should pay and forming a contextualized representation of the sequence.
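To make the mechanism concrete, here is a minimal sketch of single-head scaled dot-product self-attention in plain Python. It is a textbook illustration, not the Codec's architecture: the projection matrices `w_q`, `w_k`, `w_v` and the toy token vectors are hypothetical, and a real transformer would use learned weights, multiple heads, and a numerical library.

```python
import math

def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(matrix, vec):
    # Multiply a (d_out x d_in) matrix by a length-d_in vector.
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a list of token vectors."""
    queries = [matvec(w_q, t) for t in tokens]
    keys = [matvec(w_k, t) for t in tokens]
    values = [matvec(w_v, t) for t in tokens]
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Score this token against every token, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        attn = softmax(scores)
        weights.append(attn)
        # Contextualized representation: attention-weighted sum of value vectors.
        dim_v = len(values[0])
        outputs.append([sum(a * v[j] for a, v in zip(attn, values)) for j in range(dim_v)])
    return outputs, weights

# Hypothetical toy input: three 2-d tokens and identity projections.
identity = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, identity, identity, identity)
```

Each row of `weights` is a probability distribution over the sequence. Here the first token scores equally against itself and the third token and attends least to the second.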

When the distributed observatory of the Whole Earth Codec inverts the planet’s gaze, it begins an analogous process of self-observation. Just as a transformer learns to pay attention to parts of its input sequence, the Codec learns to observe important cross-modal qualities of the planet. It allows the Earth to observe itself observing itself.

Through this recursive process, the Codec learns to capture dependencies between its myriad inputs. It synthesizes multiple modalities and detects hidden patterns within them. This is not the panopticon-like surveillance of an external subject, but rather the observation of a model turned inwards.

This internal observation is made possible by the dual operations of encoding and embedding. Encoding the syntactical structure of input data by assigning it tokens unites a variety of sensory inputs within a shared representational space. Bioacoustic audio data can be compared to atmospheric pollutant readings, for example, because both possess patterns, also known as syntax, which can then be encoded as tokens. This encoding renders such phenomena computable.
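One simple way to turn a continuous sensor stream into tokens is uniform quantization: each reading is assigned the id of the bin it falls into. The sketch below assumes this scheme purely for illustration; `tokenize_signal`, the value ranges, and the per-modality `offset` (which places each modality in its own slice of a shared vocabulary) are hypothetical, and a production system would more likely use learned tokenizers.

```python
def tokenize_signal(samples, low, high, n_bins, offset=0):
    """Map continuous readings to integer token ids by uniform binning (hypothetical scheme)."""
    width = (high - low) / n_bins
    tokens = []
    for x in samples:
        # Clip out-of-range readings to the first or last bin.
        idx = min(max(int((x - low) // width), 0), n_bins - 1)
        # Shift into this modality's slice of the shared vocabulary.
        tokens.append(offset + idx)
    return tokens

# Hypothetical example: two modalities sharing one 32-token vocabulary.
audio_tokens = tokenize_signal([0.05, 0.4, 0.93], low=0.0, high=1.0, n_bins=16)
pm25_tokens = tokenize_signal([3.0, 48.0, 120.0], low=0.0, high=100.0, n_bins=16, offset=16)
```

Once both streams are sequences of ids from the same vocabulary, downstream layers can treat hydrophone amplitudes and particulate concentrations identically.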

The act of embedding, in which tokens are mapped to high-dimensional vector representations, allows for the discovery of hidden patterns. In the case of LLMs, abstract concepts such as tone or sentiment can be detected in the high-dimensional topology of the embedding space. “Semantic ascent” occurs as representations pass through successive layers of the neural net.
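Mechanically, embedding is a table lookup: each token id selects a row of a matrix of vectors, and proximity between vectors is typically measured with cosine similarity. In this hypothetical sketch the table is randomly initialized, so similarities carry no meaning yet; it is training that would arrange the space so that related tokens land near one another.

```python
import math
import random

def make_embedding_table(vocab_size, dim, seed=0):
    # Random initialization; a trained model would learn these vectors.
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(vocab_size)]

def embed(token_ids, table):
    # Embedding is a lookup: each token id selects one row of the table.
    return [table[t] for t in token_ids]

def cosine(u, v):
    # Cosine similarity: 1 for identical directions, -1 for opposite ones.
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

table = make_embedding_table(vocab_size=32, dim=8)  # hypothetical shared vocabulary
vectors = embed([0, 6, 23], table)
similarity = cosine(vectors[0], vectors[2])
```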

What currently imperceptible, high-level concepts might emerge from embedding the biosphere? Observation moves its gaze away from data alone, towards syntactical relations in high-dimensional latent space. The observatory folds inward, enabling a form of computational reason that transcends the immediacy of sense perception: sight beyond sight.

Assembling the Codec

The Whole Earth Codec is an autoregressive foundation model trained across multiple modalities, which enables comprehension across disparate forms of data and allows an expansive planetary intelligence to emerge.

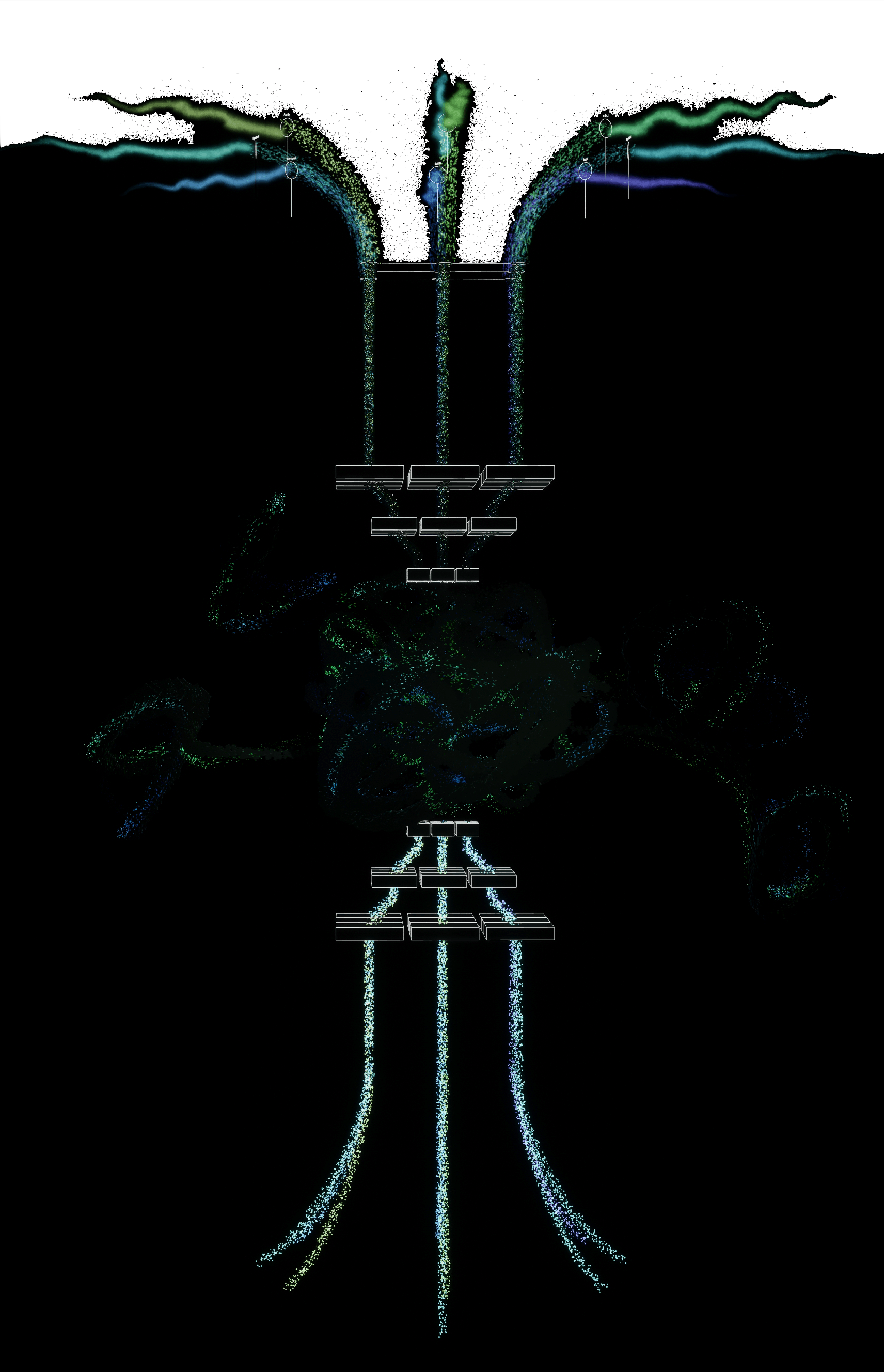

Sensing Layer

The sensing layer is where the multi-modal data of the biosphere is transduced, recorded, and digitized. Its topology is a distributed mesh network containing federated edge devices and regional data centers.

Foundation Model

Unlike a digital twin, which constructs a mimetic representation of its subject, the Codec uses computational abstractions to access information about the planet that cannot be directly perceived. These abstractions are produced by aggregating sense data within a shared knowledge architecture: the foundation model.

Sensors

Each edge device might host a different combination of sensors receiving different types of stimuli: image, audio, chemical, lidar, pressure, moisture, magnetic fields. The forms of data produced are as varied as the forms of sensing.

Federation

Although the Codec processes vast amounts of data, sensitive information is protected through structured transparency. Under federated learning, raw data never leaves the device on which it was collected. Instead, locally learned weight updates are pushed to regional data centers.
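The federated scheme described here resembles federated averaging (FedAvg), in which each device trains on its own data and a server averages the resulting weights, weighted by sample count. The toy below assumes a one-parameter linear model `y = w * x` and hypothetical client data; only the scalar weight, never the `(x, y)` pairs, passes from `local_update` to `federated_average`.

```python
def local_update(w, data, lr=0.1, epochs=5):
    """Gradient descent on y = w * x using only this device's data."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def federated_average(client_weights, client_sizes):
    # FedAvg: weight each client's model by how many samples it trained on.
    total = sum(client_sizes)
    return sum(w * n for w, n in zip(client_weights, client_sizes)) / total

def federated_round(global_w, clients):
    # Each client trains locally; only the resulting weight leaves the device.
    weights = [local_update(global_w, data) for data in clients]
    sizes = [len(data) for data in clients]
    return federated_average(weights, sizes)

clients = [
    [(1.0, 3.0), (2.0, 6.0)],              # hypothetical device A
    [(0.5, 1.5), (1.5, 4.5), (1.0, 3.0)],  # hypothetical device B
]
w = 0.0
for _ in range(10):
    w = federated_round(w, clients)
# w converges towards 3.0, the slope underlying both devices' data
```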

Spatiotemporal Anchoring

Regardless of modality, each sample carries a UTC timestamp and GPS-derived coordinates. This anchoring allows the model to make associations based on temporal and spatial correlation across modalities.
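Anchoring can be sketched as a record type plus a test for spatiotemporal co-location. The field names, the 5 km and 300 s thresholds, and the spherical-Earth haversine distance are all assumptions chosen for illustration; sample pairs that pass such a test are the kind of cross-modal correspondences the model could learn from.

```python
import math
from dataclasses import dataclass
from datetime import datetime, timezone

EARTH_RADIUS_KM = 6371.0

@dataclass
class Sample:
    modality: str
    timestamp: datetime  # timezone-aware, UTC
    lat: float           # degrees
    lon: float           # degrees
    value: float

def haversine_km(lat1, lon1, lat2, lon2):
    # Great-circle distance between two points on a spherical Earth.
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def co_located(a, b, max_km=5.0, max_seconds=300.0):
    """True if two samples fall within the same (hypothetical) spatiotemporal window."""
    dt = abs((a.timestamp - b.timestamp).total_seconds())
    return dt <= max_seconds and haversine_km(a.lat, a.lon, b.lat, b.lon) <= max_km

audio = Sample("audio", datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc), 37.77, -122.42, 0.4)
air = Sample("pm25", datetime(2024, 5, 1, 12, 2, tzinfo=timezone.utc), 37.78, -122.41, 48.0)
later = Sample("pm25", datetime(2024, 5, 1, 13, 0, tzinfo=timezone.utc), 37.78, -122.41, 51.0)
```

Here `audio` and `air` are about 1.4 km and two minutes apart, so they count as co-located; `later` falls outside the time window.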

Encoders

Foundation models are pre-trained on a massive corpus of unlabeled data, and the Whole Earth Codec is no different. Separate encoders are trained for each type of data. These encoders transform disparate, multi-modal forms of input into dense, high-dimensional embeddings within a single cross-modal latent space.

Latent Space

Through contrastive learning, the model projects temporally and spatially correlated data into nearby embeddings within the space. The latent space folds and refolds, forming a composite topology of the biosphere.
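A common contrastive objective is InfoNCE, the style of loss used in CLIP-like image-text training: each anchor embedding should score highest against its own positive partner, with the rest of the batch acting as negatives. This one-directional sketch assumes that row `i` of each input came from the same place and time; symmetric variants average the loss in both directions. The toy vectors are hypothetical.

```python
import math

def normalize(v):
    # Project onto the unit sphere so dot products become cosine similarities.
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def info_nce(anchors, positives, temperature=0.1):
    """Mean InfoNCE loss where anchors[i] should match positives[i].

    All other rows of `positives` act as negatives for anchors[i].
    """
    a = [normalize(v) for v in anchors]
    p = [normalize(v) for v in positives]
    loss = 0.0
    for i, ai in enumerate(a):
        logits = [sum(x * y for x, y in zip(ai, pj)) / temperature for pj in p]
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        # Cross-entropy with the co-located partner as the correct class.
        loss += log_sum_exp - logits[i]
    return loss / len(a)

audio = [[1.0, 0.0], [0.0, 1.0]]
air_aligned = [[0.9, 0.1], [0.1, 0.9]]   # co-located pairs already point the same way
air_shuffled = [[0.1, 0.9], [0.9, 0.1]]  # pairs mismatched
```

Minimizing this loss pulls correlated pairs together and pushes the rest apart, which is what produces the "nearby embeddings" described above.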

Fine-Tuned Models

Leveraging the pre-trained baseline, fine-tuning uses a smaller, labeled dataset to update model weights, often for specific capabilities or to address domain shift. The Codec forms the substrate for a rich ecosystem of third-party, fine-tuned models with improved performance on downstream tasks.
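One lightweight form of fine-tuning is a linear probe: the pre-trained encoder is frozen and only a small classification head is trained on the labeled data. The sketch below assumes hypothetical two-dimensional embeddings and a binary downstream label (say, whether an algal bloom is present); full fine-tuning would also update the encoder's weights.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fine_tune_head(embeddings, labels, lr=0.5, epochs=200):
    """Train a logistic-regression head on frozen embeddings.

    Only the head's weights and bias change; the encoder that produced
    the embeddings is left untouched.
    """
    dim = len(embeddings[0])
    w, b = [0.0] * dim, 0.0
    n = len(labels)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(embeddings, labels):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def predict(w, b, x):
    return 1 if sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5 else 0

embeddings = [[1.0, 0.2], [0.9, 0.1], [0.1, 0.9], [0.2, 1.0]]  # hypothetical frozen outputs
labels = [1, 1, 0, 0]
w, b = fine_tune_head(embeddings, labels)
```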

Decoders

Decoders for the different modalities are then trained to translate the embeddings into sequence predictions. Because of the massive scale of input, the model makes only a single pass over the available data.
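Autoregressive decoding and single-pass training can both be illustrated with a deliberately tiny stand-in: a bigram model whose next-token counts are accumulated while streaming through the data once, then decoded greedily, each prediction conditioned on the token just produced. The neural decoders described here would replace the count table; the token stream is hypothetical.

```python
from collections import Counter, defaultdict

def train_single_pass(stream):
    """Accumulate next-token counts in one pass; each token is seen exactly once."""
    counts = defaultdict(Counter)
    prev = None
    for tok in stream:
        if prev is not None:
            counts[prev][tok] += 1
        prev = tok
    return counts

def generate(counts, start, length):
    # Autoregressive decoding: each new token is predicted from the one just emitted.
    out = [start]
    for _ in range(length):
        nxt = counts.get(out[-1])
        if not nxt:
            break  # no continuation was observed for this token
        out.append(nxt.most_common(1)[0][0])
    return out

stream = iter([0, 6, 14, 0, 6, 14, 0, 6])  # an iterator can only be consumed once
counts = train_single_pass(stream)
print(generate(counts, start=0, length=5))  # [0, 6, 14, 0, 6, 14]
```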

Post-Codec Futures

The Codec’s emergent capabilities will act back upon the planet that produced it, remaking it in mundane and transformative ways. While its actual capabilities remain unknown, we speculate upon potential second-order effects.

Generative capacities. Foundation models possess generative capacities, extrapolating from their training data to envision new possibilities. The Codec could leverage these capacities to generate a weather pattern that increases crop yield or a synthetic bacteria-resistant genome. What is the recipe for a forest? Or for a bioweapon?

Mutually assured transparency. The same mechanism that enables these unpredictable forms of generation could also be used for their prevention through mutually assured transparency. Entities across the planet can monitor aggressors or allies equally. Carbon emissions, gene editing, and water contamination can be detected and regulated.

Future of risk. As previously unknown correlations between planetary causes and effects are revealed, the risk, litigation, and insurance industries will respond accordingly. Responsibility will become more traceable; high-resolution blame will need to be assigned. What new forms of paranoia will omniscient awareness of ecological processes induce?

Human-nonhuman interface. Bypassing anthropocentric notions of translation and communication, the Codec can be reconceptualized as an interface mediating human/nonhuman relations via high-dimensional computational abstraction. It moves us beyond goals like translating whale speech into human speech, towards a more general understanding of non-semantic mediation through syntactic similarities.

Biospheric hallucinations. The hallucinations observed in LLMs, in which a statement appears structurally correct but is factually inaccurate, will likely also be present in the Codec. Biospheric hallucinations could include false reports of a newly discovered genome, or a spurious correlation between rainfall and particulate matter. Knock-on effects from outputs that turn out to be nonsensical may erode trust in the Codec.

These futures are made possible by planetary-scale sensing and computing, directed beyond the domain of the human toward the broader domain of the ecological, of which the human is merely a subset.

The Codec allows the Earth to assemble higher levels of biospheric comprehension through computation. It enables cross-modal synthesis through topological analysis of matching syntax. It forms concepts that are not wholly constructed by humans alone. It actively reshapes the earth rather than passively modeling it.

Through the Whole Earth Codec, modes of observation are distributed, inverted, and folded. The earth can observe itself beyond direct perception alone, towards a more expansive planetary comprehension.

The Whole Earth Codec holds massive potential for public good by enabling a deeper understanding of ecological systems. As a foundation model, it makes possible a wide range of downstream applications, including assessing ecosystems, altering complex weather patterns, and predicting natural catastrophes.

Such potential also makes effective governance of the Codec critical. With high reward comes high risk: beyond its benefits, dual-use applications could accelerate environmental extraction or enable new bioweaponry. As a planetary-scale machine intelligence, the WEC requires an expansive new conception of governance, unlike the institutions we currently have in place. Old intuitions about governance become less useful. Governance of this knowledge infrastructure must emerge from the Codec's infrastructure and technical mechanisms, take into account the planet as a stakeholder rather than humans alone, and involve machine intelligence self-governing its own evolution.

The following sections contain guiding principles that inform the proposals for governing the Whole Earth Codec. These principles move us past trade-offs between existing binaries and instead suggest a new idea of governance as machinic, emergent, and integrated into the wider ecosystem. A map of the governance space of the Codec follows, divided into key ideas around governing infrastructure, information flow, resourcing, and evolution. Just as the Whole Earth Codec provides a previously nonexistent sensing-and-acting apparatus for the biosphere, its governance must also be developed anew.

Governing Principles

While there are no true precedents for planetary-scale ecological governance, extant systems for governing, for example, the Internet or the Event Horizon Telescope offer valuable design principles that may be applied to the governance of the Codec.

The governance mechanisms of the Whole Earth Codec should:

- Harness collective intelligence by integrating, rather than flattening, conflicting ideas

- Provide transparency in service of accountability

- Negotiate both local and planetary scales

- Resist extractivist logics and private capture

- Enable distributed maintenance

- Be aligned with long-term goals of humans and nonhumans alike

- Adapt and evolve in concert with shifting contexts

- Integrate the capacity of the Codec itself for simulation and prediction

The Governance Space

To make sense of the massive space that governing the Whole Earth Codec encompasses, the following section partitions it into infrastructure, information flow, resourcing, and evolution. Infrastructure refers to how the physical components invoke a particular type of distributed governance. Information flow refers to how sensitive information is controlled through technical mechanisms. Resourcing refers to how the energy, materials, labor, and capital required for operating the WEC are sourced. Evolution refers to the processes by which the Codec iterates, expands, and modifies itself, and to how those processes are themselves changed.

In each space, we propose a key idea which expands existing notions of governance, ultimately making the conditions for governing the Whole Earth Codec possible.

Infrastructure

Across the planet exists an extensive array of sensor networks and data centers, each originally established to address specific domain objectives. The Whole Earth Codec harnesses these once-siloed hardware resources (including sensors, GPUs, chips, cooling systems, cables, and facilities) into a globally interconnected and collaborative framework, laying the foundation for a planetary cognitive infrastructure.

The Whole Earth Codec enables a cooperative model of hardware anchored in various locations, consisting of a diverse range of data types and modalities, that facilitates the collection, processing, and exchange of data across domains and serves as the backbone for a planetary foundation model to operate at scale. Each interconnected node contributing to the network extends the model’s capabilities while providing incentives to each contributor to tap into the computational vigor of the hardware infrastructure and generative capacities of the model that no single domain could have fostered on its own.

The initial stages of building out the infrastructure deliberately rely on already available sites with existing operations in the field, and thus require cross-domain membership of the Whole Earth Codec. Members are network operators and are governed not by a single, centralized authority but by a collaborative effort involving various organizations, institutions, countries, and even individuals. The governance and coordination of the global infrastructure on which the Whole Earth Codec is built are achieved through a combination of international agreements, partnerships, and shared standards.

Information Flow

Typical analyses of technical privacy use “access” to describe gating information about data, code, or model weights, but thinking in terms of “information flow” instead allows a more fluid approach beyond a closed-or-open binary. Information flow refers to who knows what, and what they can do with that knowledge. In the Whole Earth Codec, information flow is controlled through privacy-enhancing technologies that allow computation while protecting sensitive information and preventing any single party from knowing the whole. No single datacenter contains the entirety of the Codec: training, weights, and inference are distributed across datacenters, and each node requires the others to operate.

Privacy-enhancing technologies. Technical mechanisms allow us to move past binaries of transparent vs. opaque, open vs. closed, instead creating a paradigm of knowledge generation without individual access to the totality of data.

Multi-party computation. Each datacenter is blinded to the information of the others, but techniques such as multi-party computation allow them to jointly compute over their combined data without revealing their individual inputs.
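A simple building block of multi-party computation is additive secret sharing. In the sketch below, each hypothetical datacenter splits a private value into random shares that sum to it modulo a prime; every party sees only random-looking shares, yet together the parties reconstruct the total. It assumes honest-but-curious participants and shows only a secure sum, far short of the secure training and inference the section envisions.

```python
import secrets

MODULUS = 2**61 - 1  # a Mersenne prime; all share arithmetic is modulo this value

def share(value, n_parties):
    """Split an integer into n additive shares that sum to it modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares

def secure_sum(private_values):
    """Simulate n parties jointly computing a sum without revealing their inputs."""
    n = len(private_values)
    # Party i splits its value and would send share j to party j.
    all_shares = [share(v, n) for v in private_values]
    # Party j adds only the shares it received, each of which looks uniformly random.
    partial_sums = [sum(all_shares[i][j] for i in range(n)) % MODULUS for j in range(n)]
    # Combining every partial sum reveals the total, never any single input.
    return sum(partial_sums) % MODULUS

# Hypothetical per-datacenter counts, e.g. detections of one species.
print(secure_sum([120, 45, 300]))  # 465
```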

Differential privacy. Individuals are protected in numbers: their data is aggregated into statistics about a large group, and calibrated random noise is added so that no single individual's contribution can be inferred from the result.
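The standard way to add calibrated noise to a count is the Laplace mechanism. This sketch assumes a counting query (sensitivity 1) over hypothetical records; `private_count` and the choice of `epsilon` are illustrative, and a smaller `epsilon` means more noise and stronger privacy.

```python
import random

def laplace_noise(scale, rng):
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon, rng=None):
    """Release a count under epsilon-differential privacy via the Laplace mechanism.

    Adding or removing one record changes a count by at most 1 (sensitivity 1),
    so Laplace noise with scale 1 / epsilon suffices.
    """
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)

rng = random.Random(0)
records = list(range(1000))  # hypothetical individual readings
noisy = private_count(records, lambda r: r % 2 == 0, epsilon=1.0, rng=rng)
```

Any single release is off by a few units, but averaged over many releases the noise cancels, which is why repeated queries consume a privacy budget.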

Networked communications. The WEC thus exists not at any individual node but in the web of their networked communications. The information flow of the WEC preserves fine-grained privacy while enforcing collective communication; without the greater network, no individual sensor or datacenter could compute the knowledge emerging from the Codec. Its mechanisms enforce a collaborative computation that is neither opaque nor transparent.

Resourcing

The Whole Earth Codec is not an external system imposed upon the planet, but rather an integrated planetary infrastructure produced by and through the earth itself. “Planetary” here refers not just to the scale of the infrastructure but also to the earthbound physical, mental, and economic resources from which the system is constructed. Flows of energy, materials, capital, and human labor are required to build and maintain the Codec in perpetuity, and these should remain well within ecological limits to ensure a regenerative and sustainable resourcing mechanism.

Energy. Training a planetary-scale foundation model is energy intensive. Training tasks are decentralized so as not to put strain on any particular region; this decentralization is deliberately uneven, prioritizing regions with cleaner grids and more robust economies.
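One hypothetical way to implement deliberately uneven decentralization is proportional allocation with caps: each region's share of training hours is weighted by the inverse of its grid's carbon intensity, no region is asked for more than its stated capacity, and any overflow is redistributed among the rest. Only the grid-cleanliness criterion is modeled here; the region names, intensities, and capacities are invented, and a fuller scheme would also weigh economic robustness.

```python
def allocate_training(total_hours, carbon_intensity, capacity):
    """Split training hours across regions, favoring cleaner grids (hypothetical scheme).

    Shares are proportional to 1 / carbon intensity (gCO2/kWh), capped at each
    region's capacity; any excess is redistributed to regions with room left.
    """
    alloc = {r: 0.0 for r in carbon_intensity}
    open_regions = set(carbon_intensity)
    remaining = total_hours
    while remaining > 1e-9 and open_regions:
        weights = {r: 1.0 / carbon_intensity[r] for r in open_regions}
        total_w = sum(weights.values())
        spill = 0.0
        for r in list(open_regions):
            give = remaining * weights[r] / total_w
            room = capacity[r] - alloc[r]
            if give >= room:
                # Region is full: cap it and carry the surplus into the next round.
                alloc[r] = capacity[r]
                spill += give - room
                open_regions.remove(r)
            else:
                alloc[r] += give
        remaining = spill
    return alloc

alloc = allocate_training(
    1000.0,
    carbon_intensity={"region_a": 50.0, "region_b": 200.0, "region_c": 400.0},
    capacity={"region_a": 500.0, "region_b": 400.0, "region_c": 400.0},
)
# region_a is capped at 500 h; its overflow is shared 2:1 between region_b and region_c
```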

Materials. The physical infrastructure of the Codec relies on a range of material resources extracted from the earth. Care should be given to where these resources are sourced from; scaling up the system should not come at the expense of particular regions, especially the low- and middle-income countries that are disproportionately exploited by current extractivist logics.

Capital. The Codec requires a large upfront investment, followed by comparatively modest financial resources for its ongoing maintenance and evolution. While the Codec must initially be funded within a market-driven capitalist economic framework, the long-term aim is to decouple its financial resourcing from the whims of the market.

Funding sources. Capital could come in many forms:

- Levy on downstream applications making use of the Codec (e.g. a 1% levy on any product or application developed using the Codec)

- Carbon tax that provides initial investment while doubling as a reparative mechanism

- Donations and investments from existing institutions, funds, and governments

- Insurance and risk-based financial instruments that prioritize preventative measures in the present

Fund/Trust. Capital streams are pooled in a fund or trust, designed to benefit a particular purpose (e.g. planetary well-being) rather than a particular person. This body functions as the mechanism for the gathering, governance, and reallocation of the financial resources required to support the Codec.

Fund Governance. Proportions of funds could be earmarked to serve particular ends (e.g. 5% of funds dedicated to AI safety research), allocated to repair existing planetary inequalities (e.g. in inverse proportion to national GDP), or distributed as microgrants to develop new functionalities and use cases for the Codec. These priorities and allocations should be decided using collective intelligence mechanisms for opinion polling and information gathering (e.g. quadratic voting, liquid democracy, retroactive funding), as well as the intelligence of the model itself.
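The two example allocation rules in this paragraph can be combined in a few lines: reserve earmarked fractions first, then split the remainder in inverse proportion to GDP. The function name, the earmark label, and the GDP figures are hypothetical; as the text notes, the weights themselves would be set through collective decision mechanisms.

```python
def allocate_fund(total, gdp_by_country, earmarks):
    """Reserve earmarked fractions, then split the rest in inverse proportion to GDP."""
    allocations = {name: total * frac for name, frac in earmarks.items()}
    remainder = total - sum(allocations.values())
    # Lower GDP means a larger inverse weight and therefore a larger share.
    inverse = {country: 1.0 / gdp for country, gdp in gdp_by_country.items()}
    total_inverse = sum(inverse.values())
    for country, weight in inverse.items():
        allocations[country] = remainder * weight / total_inverse
    return allocations

alloc = allocate_fund(
    1_000_000.0,
    gdp_by_country={"country_x": 1.0, "country_y": 4.0},  # hypothetical, trillions USD
    earmarks={"ai_safety_research": 0.05},
)
# country_x, with a quarter of country_y's GDP, receives four times as much
```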

Labor. The ongoing maintenance and evolution of the Codec rely on continuing human labor and ingenuity. This labor is both intellectual and casual, much as the internet is maintained by a mix of technical protocol maintenance and the casual input of everyday users. Technical maintenance and feature implementation will likely be completed by a small group of dedicated experts. A smart-contract-enabled guild might allocate funds to individual developers to incentivize maintenance and development tasks. Bug bounties might incentivize the discovery and repair of bugs, or be expanded to tackle deeper challenges such as safety and bias reduction.

Evolution

Finally, governance of the WEC is concerned with the processes by which the Codec modifies itself and iterates over time: its evolution. How does it expand its sensor network, change its code, or withdraw from mistaken advances? The human component of its development is carried out by an open-source community of volunteers, with funding mechanisms motivating long-term contribution, but humans are ultimately only a subset of the stakeholders in its evolution. As the WEC is an infrastructure of planetary comprehension, its evolution must also be determined by ecological agents: the wider biosphere.

Integrating the planet into decisions around evolution is made possible by different mechanisms for goal-setting and self-governance. Plural voting that involves nonhuman stakeholders can be used to allocate funds towards long-term development goals; rather than casting a single vote, each party has multiple “credits” it can distribute, producing a more nuanced value signal.
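Spending credits across options is the core of quadratic voting, one of the mechanisms named under Fund Governance: casting `v` votes on an option costs `v**2` credits, so strong preferences are expensive to express. In this sketch the stakeholder names, options, and 100-credit budget are hypothetical, and how a nonhuman stakeholder's ballot would actually be derived is left open.

```python
import math

def quadratic_tally(ballots, budget):
    """Tally quadratic votes: spending c credits on an option yields sqrt(c) votes.

    Equivalently, casting v votes costs v**2 credits, so intense preferences are costly.
    """
    totals = {}
    for stakeholder, spend in ballots.items():
        if sum(spend.values()) > budget:
            raise ValueError(f"{stakeholder} exceeds its credit budget")
        for option, credits in spend.items():
            totals[option] = totals.get(option, 0.0) + math.sqrt(credits)
    return totals

# Hypothetical stakeholders, one of them a nonhuman proxy, each with 100 credits.
ballots = {
    "coastal_city": {"seawall": 81, "wetland_restoration": 19},
    "wetland_proxy": {"wetland_restoration": 100},
    "fishers_coop": {"seawall": 36, "wetland_restoration": 64},
}
totals = quadratic_tally(ballots, budget=100)
```

The city concentrates 81 credits on the seawall but gets only 9 votes for it, so broad moderate support for wetland restoration wins out.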

The machine intelligence of the WEC becomes capable of self-governing and reconstituting itself. It can generate optimized protocols for sending data between datacenters and sensors in place of hand-crafted ones. It can learn where to send data, balancing the anonymization of content against the aggregation of relevant data at different scales. It can even sense blind spots in its own sensor network and determine coordinates for placing new sensors.
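Finding blind spots can be framed as a coverage problem. The sketch below uses a simple farthest-point heuristic on a grid: each new sensor goes wherever the distance to the nearest existing sensor is largest. Planar coordinates, the grid step, and greedy placement are simplifying assumptions, and the function names are hypothetical; a learned system might instead weigh where its predictions are most uncertain.

```python
import math

def largest_gap(sensors, x_max, y_max, step=1.0):
    """Return the grid point in [0, x_max] x [0, y_max] farthest from any sensor."""
    best_point, best_dist = None, -1.0
    for i in range(int(round(x_max / step)) + 1):
        for j in range(int(round(y_max / step)) + 1):
            p = (i * step, j * step)
            nearest = min(math.dist(p, s) for s in sensors)
            if nearest > best_dist:
                best_point, best_dist = p, nearest
    return best_point, best_dist

def place_sensors(sensors, k, x_max, y_max, step=1.0):
    """Greedily add k sensors, each at the current largest coverage gap."""
    placed = list(sensors)
    new = []
    for _ in range(k):
        point, _ = largest_gap(placed, x_max, y_max, step)
        placed.append(point)
        new.append(point)
    return new

existing = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]  # hypothetical sensor sites, in km
print(place_sensors(existing, 2, 10.0, 10.0))  # [(10.0, 10.0), (5.0, 5.0)]
```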

For any evolution of its system, the WEC can simulate the impact on the planet, comparing different futures with one another. Ultimately, this planetary sensing-and-acting apparatus becomes the means by which the biosphere is able to have agency over itself, in contrast to earlier, solely human intuitions about modifying the Earth.

As a machinic interface between humans and nonhumans, the Whole Earth Codec creates a paradox of interdependence: it at once removes humans from the loop, creating a nonhuman-machine fusion that is capable of self-regulating, yet this becomes instrumental in forming a deeper interdependence between humans and nonhumans as symbionts.

Removing humans from their position as top-down stewards of the planet, and making them relinquish myopic control over earth systems, creates an opportunity for us to relearn intuitions of relating to the ecologies around us. We have to learn to tune in to the biosphere anew. In the nascent symbiosis of humans, nonhumans, and machines, what new roles does each party play? What new forms of relation arise?

Nonhumans & the Whole Earth Codec

Humans are deeply entangled with the planet. The centuries following industrialization, however, reveal a willful ignorance of this fact: humans have unidirectionally exacted their needs on the planet with little regard for downstream costs. The systematic extraction and manipulation of the environment through technological means has terraformed the planet in the image of one species, at the expense of all others. Recent extreme weather and rapid extinction caused by rising temperatures make this entanglement impossible to ignore any longer.

This resurgent awareness of entanglement is only made possible through the same technology that led to this predicament in the first place. Technologically-mediated forms of sensing reveal previously hidden patterns and relations at scales far larger and smaller (and faster and slower) than those available to human senses.

Humanity is unthinkable outside of technology; human history is a history of technologization. As human-machine assemblages, we have achieved previously impossible feats. Yet this human-driven technologization affects far more than just the human species. Our existence on this planet is also deeply enmeshed with nonhumans. While the term “nonhuman” often refers to other living organisms (such as plants and animals), the nonhuman also encompasses all of the abiotic yet agential systems giving shape to the world: natural phenomena, geographic features, weather patterns. All of these entities are deeply affected by technology and increasingly unthinkable outside of it. Why then do they have so little agency in its operation?

Rather than omitting nonhumans from the technological infrastructure which senses, processes, and modifies the planet, what if nonhumans were also enmeshed with these systems? What is technology outside of human instrumentality and control? Imaginaries of anthropocentric relations such as human-nonhuman or human-machine are pervasive, but there are fewer examples of what nonhuman-machine relationships could become. In “The Land That Owns Itself” by the Center for Democratic and Environmental Rights (CDER), land is transformed from human property to being self-owned by nature. This grants certain rights under the law, but how is self-ownership actually realized? Projects such as terra0 put forth a framework for technologically augmenting ecosystems such as forests so that they may act as agents in their own right. These and other nonhuman-machine systems mark the beginnings of granting nonhumans more agency.

As a multi-modal, planetary-scale foundation model, the Whole Earth Codec becomes a vehicle for human-nonhuman-machine communication, allowing nonhumans to reassert their needs and become active agents in co-determined technological evolution. The Codec bypasses existing binaries of top-down/bottom-up and serves as a distributed yet centralized model that hooks into earth systems and moves all parties towards more equilateral relations.

It is able to do so by:

Aggregating. Machine learning models wield the ability to compress massive amounts of data. The totality of planetary information has been previously incomprehensible to a single “mind,” but can now be compressed and processed by a synthetic one. Compression smooths away detail yet allows for larger contours to emerge: statistical entanglement in the form of cross-modal embeddings becomes the means through which more balanced planetary-scale entanglement might be achieved. Just like the Talk to the City project by the AI Objectives Institute, the Codec synthesizes the needs, wants, and hopes of both humans and nonhumans. By aggregating these needs, it makes them newly comprehensible and thus actionable. This process does not have to be viewed as a centralizing force; rather the Codec could be subdivided into localized, speciated models that communicate with each other.

Connecting. While species have always lived alongside one another in dense webs of cause and effect, communication across species has remained severely limited. By permitting information exchange across previously siloed populations, needs can be expressed, greater awareness can be formed, and relationships can be strengthened. These connections can also span nonhuman-nonhuman communities rather than simply bridging humans and nonhumans.

Evolving. Initially, the Codec is able to preserve current ecosystems and persist existing patterns by learning the intricacies of the planet's historical data: how things have been. Planetary maintenance is thus the base step, but the long-term goal of the Codec should be to guide continuous planetary evolution, driven by novel nonhuman and human input. These objectives can be plural and contradictory: increasing biodiversity, lowering carbon impact, avoiding genetic stagnation. Machine learning models are capable of multi-objective optimization that is difficult for biotic brains, and the Codec could take into consideration the aggregate needs of all planetary systems and inhabitants. For now, we can assume scarce resources and dynamic change: not every organism can live, yet we are interested in propelling the entire ecosystem forward rather than maintaining the status quo. To effect change, the Codec puts forth policy at different scales and temporalities: some may apply to a city or to the entire planet, some for a day or for 50 years, some to fishermen or to those who own diesel cars.
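Rather than collapsing plural and contradictory objectives into one score, a multi-objective optimizer can keep every policy that is not dominated, that is, not matched or beaten on every objective by some other policy. The sketch below filters such a Pareto front; the policy names and their scores for biodiversity gain, carbon reduction, and genetic diversity are invented, and all objectives are expressed so that higher is better.

```python
def dominates(a, b):
    # a dominates b if it is at least as good on every objective and better on one.
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))

def pareto_front(candidates):
    """Keep candidates that no other candidate dominates (all objectives maximized)."""
    return {
        name: scores
        for name, scores in candidates.items()
        if not any(dominates(other, scores) for o, other in candidates.items() if o != name)
    }

# Hypothetical scores: (biodiversity gain, carbon reduction, genetic diversity).
policies = {
    "fishing_quota": (0.6, 0.2, 0.5),
    "diesel_phaseout": (0.2, 0.8, 0.3),
    "corridor_network": (0.7, 0.1, 0.7),
    "status_quo": (0.1, 0.1, 0.2),
    "quota_lite": (0.5, 0.2, 0.4),
}
front = pareto_front(policies)
```

The three surviving policies embody genuine trade-offs; choosing among them is where the plural, contradictory objectives described above would have to be negotiated.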

The Whole Earth Codec is not imposed on the earth from the outside but rather permeates it in the way that all technological instrumentation has. Planetary information is soaked into the Codec, which in turn dissolves into techno-ecosystems, blurring the line between solute and solution. It actively steers and is steered by the complex ecologies it is entangled with.

Post-Anthropocene: Humans re-integrated with planetary ecologies

With the Whole Earth Codec taking on a new role in planetary relations—providing a layer of distributed cognition that is capable of planetary-scale aggregation and optimization—humans can cede myopic control over the earth. Existing attempts by humans to ameliorate issues such as ecological devastation have been limited by their informatic shortcomings, resulting in siloed strategies that flatten nuanced interdependencies, or resignation in the face of the sheer complexity of how planetary-scale crises like climate change unfold. The unique affordances of the Codec might enable us to move beyond previously unsuccessful attempts to make meaningful change at scale.

Current ecosystem management paradigms seek to maintain long-term sustainability while fulfilling human needs. Unable to grapple with the complexities of ecological systems, blunt human policies that seek to control nature often lead to unintended consequences such as a decline in ecological resilience. More reparative strategies such as conservation and rewilding betray an undercurrent of stasis and reversion, respectively. Conservation techniques, which seek to ward off extinction and biodiversity loss, usually take the form of protecting specific species. They aim for survival-level recuperation without significantly infringing upon human behavior; think wildlife corridors along miles of freeway. More radical policies of ecological restoration, like rewilding, seek to reduce human influence on natural ecosystems, trading human interventions for natural processes. Yet this line of thinking is also misguided, reifying a nature-culture divide and hinging on a fantasy of return to some unblemished slate.

Rather, we should chart a way forward with technology that scaffolds new possibilities for humans and nonhumans alike. By understanding the multi-dimensional relations on the earth, the Codec could address challenges within ecosystem management such as poor monitoring or unintended effects. It could surface sites of ecological devastation and even reveal new forms of devastation that we do not yet understand. And rather than simply revealing such issues for humans to act upon, it could also wield more agency to directly ameliorate them.

Currently, both state and non-state actors are tasked with setting and enforcing ecological limits that determine what humans can do to the earth. These limits are derived from models constructed from years of piecemeal and laborious research, propagating slowly through institutions and policy. The result is often fragmented, lacking the broad scope through which planetary limits must be conceived.

Related to the difficulty of determining limits is the challenge of articulating them. Resource extraction affects the planet in more slippery, ill-defined ways than can easily be distilled into human-governable metrics. Existing institutions are not capable of overseeing fuzzy restrictions that are context-specific or fluidly changing, and any enshrined metric becomes inflexible and subject to Goodhart's Law: once a measure becomes a target, it ceases to be a good measure.

While all of these efforts are commendable, they lack enforceability: they are subject to the whims of political cycles and anthropocentric short-sightedness. The models that detect harm cannot act upon their findings. International agreements, from the Paris Agreement to more recent pacts made at COP meetings to limit global warming to below 2 °C above pre-industrial levels, have largely been unenforceable, and the world remains off track to meet their targets. Smaller-scale regulations within industries like mining, fishing, or drilling are overly reliant on self-reporting and spot-checks.

The Codec attempts to move beyond this unenforceability. Rather than remaining severed from the world, the model is directly integrated within its processes: ecological limits are built into design tools rather than imposed from the outside. Much like GPT has been integrated into a variety of language-based applications, the Codec could embed ecological intelligence within the tools used to give shape to the world, from architecture design software to fishery management or mining and resource extraction tools. This allows governance to be integrated into the very processes of modifying the earth, rather than imposed post hoc as difficult-to-enforce limitations outside of design processes. This could take the form of automatic constraints built into design tools, limiting the possibility space to better match the contours of complex, multivariate ecosystems as modeled in simulation. Through its statistical processing, the Codec can reveal adverse impacts on parts of the ecosystem and identify the limitations that must be imposed. These limitations are flexible, applied not uniformly throughout a population but attuned to specifics. Through its perpetual sensing and processing of data, the Codec could maintain an open-ended governance of ecological limits.

Fishing Management, 2050

Rather than pre-planned static routes and seasonal quotas, the Codec generates dynamic “fisherity” maps and schedules that mitigate destructive overfishing practices.

These maps and schedules indicate the optimal time periods, geographic zones, and sustainable catch volumes for fishing activity in a given area based on real-time data and predictive modeling of factors related to the overall marine ecosystem health.

Some major commercial fishing nations refuse to adopt the Codec’s dynamic fisherity system. They continue to operate on their traditional pre-scheduled routes and fixed quotas in the waters they claim as exclusive economic zones.

Although the Codec detects that these rogue nations are overfishing and depleting stocks beyond sustainable levels, it lacks any real enforcement capability over actors that deviate from the schedule. Instead, it automatically re-routes legal fishing activity away from the overfished areas, allowing them to recover over time.

International fishermen and organizations that have agreed to follow the Codec's fisherity system voice criticism. They feel it is unfair that they are forced to abide by the maps and fishing rules while the rogue nations continue to overfish without accountability.

Surprisingly, rogue fishing nations see their economic yields diminish rapidly compared to fisherity-compliant competitors who are able to access more productive waters. Some rogue fishermen retaliate by attempting to physically disable or spoof some of the Codec’s offshore sensor networks.

Over time, adherence to the Codec's fisherity system becomes a commercial advantage and reputational necessity for fishing operators wanting to access prime areas replenished through sustainable practices. The rogue nations find themselves frozen out, stuck plundering increasingly barren waters.

Architecture Software, 2028

Architects integrate the Codec into their design software. Each site plan is given a dynamic inference profile. Designers are given a set of constraints that limit the available materials, dimensions, and site layouts they can use to build a project.

In Turkey, an airport terminal proposal is abandoned because designers can't select concrete as a material in the interface. The Codec determines that any significant use of concrete at the site would destroy a crucial watershed nearby. The Codec reasons that a diminishing watershed contributes to high thermal mass in the summer months, raising temperatures to levels uninhabitable for a variety of flora and fauna in the region.

In addition to its constraints, the inference profile proposes alternative development frameworks: favorable forms, optimal resources, and development strategies in line with regional metabolic processes.

In Vietnam, developers are authorized to build a high-speed railway on the condition that they promote terracing practices and plant cover crops across barren regions that have suffered severe land degradation.

Developers are skeptical. They believe that the model's integration would only complicate and limit their ability to contribute to larger, more urgent development projects. Designers running up against these hard limits argue that the constraints impede their agency to ideate and create freely.

In India, weaving pathways emerge across dense residential neighborhoods. Residents learn that these large columns cutting through parts of the neighborhood form a crucial migratory corridor for thousands of cranes that feed on the Cyperaceae sedges abundant in the area every November.

As more projects are made using the Codec's inference profiles, public perception of the design paradigm changes. Architects are challenged to create more novel constructions and, over time, emergent structures informed by the Codec reveal compelling phenomena about the surrounding ecosystem that could never previously be observed.

The Whole Earth Codec thus creates the paradox of:

- enabling a self-regulating ecosystem that removes humans from the loop

- enabling a deeper interdependence between humans, nonhumans, and the ecosystem

Removing humans from their top-down and often myopic control of ecological systems presents a new opportunity for a symbiotic interdependence amongst all parties inhabiting the planet. When we are barred from making unilateral decisions about earth systems, we become more attuned to the ecologies around us and more beholden to planetary needs. What might this new entanglement of human, nonhuman, and planetary systems look like?

Initially, the Codec spreads awareness among humans as we tune in more to the ecosystem around us. As human-nonhuman communication proliferates, we become more aware of the needs of nonhumans and the ecosystem. Needs can be directly expressed in human-legible terms, like a tree specifying that it needs to be watered, but new forms of expressing desires may also take shape. Awareness here could encompass both directly communicated needs and a more general “feeling” honed from being more interconnected with the complex ecological systems around us. Beyond greater awareness alone, which relies on human willingness, the Codec also enforces stricter imperatives around respecting ecological boundaries. Humans are more beholden to planetary restrictions and rub up against the specific impacts of their actions.

Whale Communication, 2031

Scientists fine-tune a version of the Codec on a vast amount of multimodal data concerning whale behavior. The model becomes capable of translating whale vocalizations into human language.

The world is captivated. Initial findings reveal complex social structures, emotional depth, and even elements of what could be termed 'whale culture'. Audio recordings of translated whale songs find their way into TikToks, DJ sets, and pop songs.

Conservationists and animal activists champion the development, assuming that this will lead to increased empathy and cause a broader societal shift towards increased animal welfare. Others condemn this as an exploitative violation of whale privacy: do humans have the right to decode and interpret whale communications without their consent?

Entrepreneurial companies capitalize on the shift, developing generative models and underwater hydrophones that broadcast synthetic whale vocalizations in an attempt to talk back to the whales. "Whale communication experiences" are offered and tourism booms, increasing marine traffic and placing stress on whale populations. Communication is often unsuccessful, suggesting that something fundamental lies outside the scope of the model. Regardless, synthetic vocalizations begin to profoundly alter whale migration patterns and breeding behaviors, leading to unpredictable transformations in ecosystems and food chains.

A Codec Improvement Proposal for stricter restrictions on API access for generative purposes gains traction in the public forum. Public opinion remains split over the matter, but during a voting round, a quadratic mechanism nonetheless allocates a portion of Codec funds towards building new governance mechanisms into the Codec that restrict non-research uses going forward.

The Codec could, for example, impose particular constraints around supply chains involved in the extraction, manufacturing, and purchasing of goods. This does not make planetary restrictions simply the problem of individuals: when corporations want to build something that levies a cost on the planet, the Codec would embed constraints into their processes. As the causes and effects of our actions enter our awareness, especially as we hit hard limitations, we reach a deeper understanding of our planetary interdependence.

The process of humans ceding ecological agency to the Codec will encounter messy intricacies. As the global response to climate change has demonstrated, human behavior is difficult to change even with indisputable knowledge that change must happen. The Codec should also take traditional cultural legacies into consideration in the process of need aggregation; just as indigenous people in Alaska are permitted to continue whaling, the policy set by the Codec should arise from a process of context-specific negotiation. This negotiation must occur between human desires and those of nonhuman organisms and systems; a delicate balance must also be struck between agential desires and ecological imperatives. Any such imperatives should not be imposed at the end but rather built into the processes through which our desires manifest. As a technological interface plugged into human and nonhuman communities alike, the Whole Earth Codec creates a new interdependence among organisms, systems, and lifeforms, biotic and abiotic alike.

Collective Iteration, 2048

The Codec operates on a physical infrastructure distributed throughout the world. When parts of the sensor network are down, damaged, or in need of expansion, the Codec notifies the nearby nodes in the network. The Codec identifies a lack of high-resolution bioacoustic data in Eastern Europe. It automatically generates a proposal to build out new bioacoustic sensors between Estonia and Bulgaria and a data center in Romania.

After determining that Australia has the world's most abundant and least ecologically detrimental reserves of zinc, lead, and lithium, the Codec automatically notifies each member node in the network of its findings, along with plans for roll-out. Member nodes near the Australian region are tasked with notifying the Australian government of the planned mining operations.

Local community organizers raise concerns about the Codec's extractivist logic, citing violations of indigenous rights and environmental justice principles. Several of the organizers draft a Codec Improvement Proposal for changes to the way the Codec weighs traditional cultural legacies. The proposal gains traction within the wider international community.

Discussions in the network's forum range from ways to hardcode core alignment goals into the model to account for indigenous knowledge and rights, to ways of “teaching” the model about practices that cannot be objectively sensed and transduced for the Codec to understand. Some on the forum question how much the Codec really understands human needs and desires, and suspect that it may be overprioritizing the nonhuman. Others criticize how the Codec reinforces nation-state control by notifying governments instead of the local people whose lives would be affected by new mining operations. The authors of the proposal update their draft using the feedback and insights gained from community deliberations.

The Codec synthesizes its original build-out proposal with the improvement proposal into a new proposal. The new proposal suggests core changes to the Codec's extraction protocol, rerouting a portion of the mining operations from Australia to Chile. In addition, it introduces a protocol for benefit sharing, tasks regional nodes with notifying local communities affected by new network build-outs, and strongly encourages bottom-up access and contribution to the Codec.

Although the Codec's latest proposal makes concessions by redistributing some mining operations to Chile, mining plans in Australia are still put into motion. The community organizers in Australia are baffled. Considering all the outrage against extraction in Australia that was comprehensively documented in their proposal, how could the Codec still decide to move forward to mine in Australia?

After several proposal iterations, the build-out plan in Eastern Europe succeeds. A percentage of profits from applications built using the high-resolution bioacoustic data in Europe is redirected into a trust. Part of the trust is reserved for maintenance and future build-outs of the Codec, while the remaining portion is redistributed to sites of extraction in Australia and Chile. These funds go towards land rehabilitation and potential roll-out proposals for local communities to coordinate new network build-out through grassroots efforts.

After altering its extractivist protocols, the Codec strongly recommends grassroots efforts to build out the network, especially in resource-rich regions likely to be in high demand for extraction. This is because the areas the Codec indicates as optimal for resource extraction are often the same sites that lack the robust sensor network the Codec needs to make more meaningful and equitable decisions.

Bottom-up build-outs proliferate. It becomes common practice to build out small-scale networks of high-quality sensors in frequent extraction zones to ensure the Codec gives due consideration to all stakeholders involved, human and nonhuman.

New symbionts of human, planet, machine

Ultimately, the Whole Earth Codec suggests a new symbiosis between humans, nonhumans, and machines. What might this symbiosis look like? What new forms might it propel us towards? The evolution of the biosphere has always been driven by an evolution of information processing. The addition of the Codec’s technological interleaving is not the sudden imposition of an alien computation onto the planet but rather the latest evolution of the planet’s ability to process information about itself. Published in 1995, John Maynard Smith and Eörs Szathmáry’s seminal work of evolutionary biology, “The Major Transitions in Evolution,” puts forth a hypothesis for why evolution has led to ever more complex lifeforms.

From prokaryotes to eukaryotes, protists to multicellular organisms, individuals to colonies, each major transition is predicated on both a new unit of individuality and a new method of information processing. These more complex methods of information transmission allow smaller entities to come together to form larger entities, specializing in their abilities as they become part of a greater whole. Eventually, the smaller entities are unable to replicate without the larger individual, and the fundamental “unit” of the individual changes.

This theory gives us an idea for what a Codec-induced symbiosis may become: the co-evolution of human and nonhuman organisms towards more complex, higher-order forms. This would involve the human and nonhuman, nature and machine, at the level of individuals, colonies, and systems. Perhaps the Codec would enable a new form of hereditary information transmission that is able to encode more complexity than any medium thus far, allowing for a new major transition in evolution. Organisms of ascending complexity may emerge through endosymbiosis, each consisting of biotic, abiotic, and machinic organs.

These new organisms emerge as new forms of coordination become possible via the Codec. As the Codec opens up communication between humans and nonhumans, between nonhumans and other nonhumans, and between every organism and the machine, the communication medium itself evolves alongside the communicating parties. As complex concepts become expressible via information exchange, they can in turn bootstrap higher complexity by conveying further meaning in more articulate language. This allows for more efficient coordination between parties, whether human or nonhuman. New and shifting groupings, colonies, assemblages, and nested hierarchies take form, mediated by the Codec. The notion of the individual is blurred and under constant transformation. Through the informatic interweaving of humans and nonhumans, perhaps the planet itself emerges as a super-organism with its own goals.

In the beginning, a coordinating group may contain generalized members, such that each one is capable of doing all tasks. However, since all organisms have different capabilities, some are naturally more adept than others within their niches. Specialized subgroups slowly appear. This specialization occurs at different levels: just as biological organisms are composed of organs, subdivided into cells, subdivided into organelles, the larger organisms can also be part of teams within clans within populations. More fluid compositions enabled by the Codec could allow for dynamic recompositions at every level. These symbiotic groupings themselves will undergo processes of specialization and perhaps speciation. With the pooled knowledge of all these entities in correspondence, new ways of thinking, coordinating, organizing, modeling, and understanding might develop.

Under the imminent threat of climate change, visions for the future of the planet have been limited to mere disaster aversion. There has been little opportunity to articulate future-looking policy of what the earth could become outside of this restrictive vision. From carbon dioxide removal through sequestration to solar radiation modification via aerosol particles, large-scale technological visions for the planet are focused on mitigating the effects of climate change. Holly Jean Buck discusses the false binary of geoengineering: between industrial, extractivist technosolutionism on one hand and restorative climate justice and degrowth on the other. The myth of this binary prevents us from viewing nature as always inexorably intertwined with the attempts of humans and nonhumans alike to recursively modify it; technology is just another agent at play within the dynamics of the planet.

The Whole Earth Codec becomes the new solvent for human-nonhuman interdependence at planetary scale.

Studio Researchers

Connor Cook

Christina Lu

Dalena Tran

Program Director

Benjamin Bratton

Studio Director

Nicolay Boyadjiev

Associate Director

Stephanie Sherman

Senior Program Manager

Emily Knapp

Network Operatives

Dasha Silkina

Andrew Karabanov

Art Direction

Case Miller

Sound Design

Błażej Kotowski

Graphic Design

Callum Dean

Voiceover Engineer

Sam Horn

Editor

Guy Mackinnon-Little